¶ What is the AI Toolbox?

While a number of AI tools and toolboxes are available, industrial applications pose new challenges for off-the-shelf solutions. The goal of the AIMS 5.0 AI Toolbox is not to replace existing toolboxes. Rather, it extends existing solutions by providing industry with information exchange and a knowledge base that guide and supervise the design and development of industrial AI applications.

To support AI service design and implementation, the AIMS 5.0 AI Toolbox has the following objectives:

- Reliability and security: Documented best practices help designers enhance the reliability and security of their services.

- Decrease time-to-market: Design templates, samples, and reusable, compatible tools enable fast prototyping, which shortens the time-to-market.

- Prompt evaluation: The toolbox provides tools that are easy to evaluate, so industrial proofs of concept can be tested quickly.

- Composition: The AI Toolbox collects tools that cooperate with each other through standard interfaces, so they can be composed to implement complex applications. Composition also improves the reusability of AI tools.

- Share knowledge: The toolbox provides means to exchange and share valuable knowledge and best practices.

- Extensive support: The AIMS 5.0 AI Toolbox natively supports any existing AI tool or toolbox.
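The Composition objective can be illustrated with a minimal Python sketch. It assumes a hypothetical standard interface in which every tool maps a JSON-like dictionary to a new dictionary; the names `Tool`, `FunctionTool`, and `Pipeline`, and the two example tools, are illustrative only and are not part of any published AI Toolbox API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Protocol

Payload = Dict[str, Any]


class Tool(Protocol):
    """Hypothetical standard interface: a tool maps a JSON-like payload to a new one."""

    name: str

    def run(self, payload: Payload) -> Payload: ...


@dataclass
class FunctionTool:
    """Wraps a plain function (e.g. logic taken from a notebook) so it satisfies Tool."""

    name: str
    fn: Callable[[Payload], Payload]

    def run(self, payload: Payload) -> Payload:
        return self.fn(payload)


@dataclass
class Pipeline:
    """Chains tools: each tool's output becomes the next tool's input.

    A Pipeline itself satisfies Tool, so pipelines can be nested.
    """

    name: str
    tools: List[Tool]

    def run(self, payload: Payload) -> Payload:
        for tool in self.tools:
            payload = tool.run(payload)
        return payload


def normalize(payload: Payload) -> Payload:
    # Scale readings to [0, 1] by their maximum (assumes a positive maximum).
    peak = max(payload["values"])
    return {"values": [v / peak for v in payload["values"]]}


def detect(payload: Payload) -> Payload:
    # Flag the indices whose normalized value exceeds a fixed threshold.
    return {"anomalies": [i for i, v in enumerate(payload["values"]) if v > 0.9]}


app = Pipeline("anomaly-app", [FunctionTool("normalize", normalize), FunctionTool("detect", detect)])
print(app.run({"values": [1.0, 2.0, 10.0, 3.0]}))  # {'anomalies': [2]}
```

Because `Pipeline` exposes the same `run` method as a single tool, a composed application can itself be reused as a tool inside a larger chain.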

¶ AI Toolbox scale levels

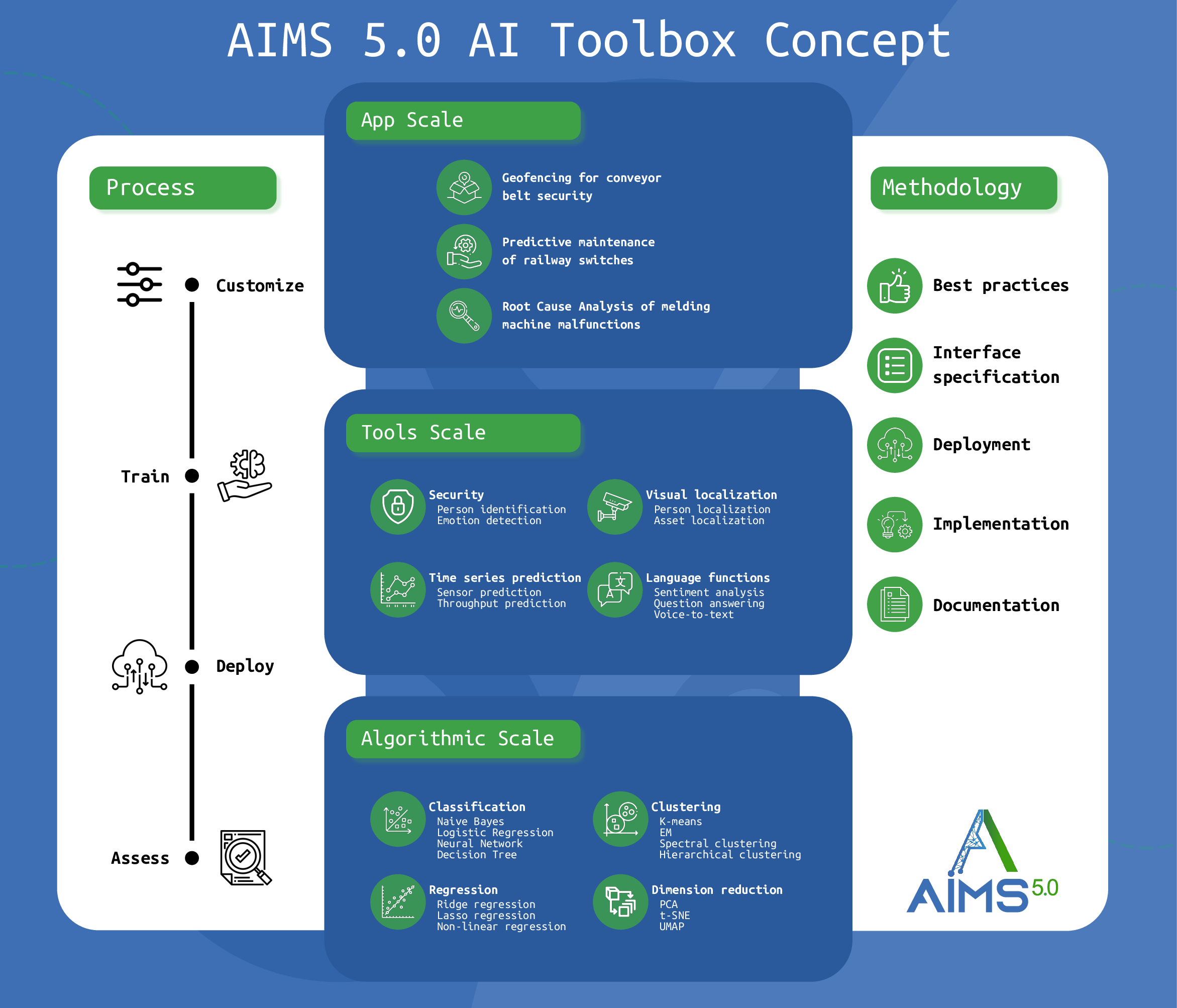

The figure above shows the main concept of the AIMS 5.0 AI Toolbox: four levels of AI service scale that differ primarily in granularity. The three implementation-based levels rest on the fourth, mostly theoretical, methodology scale. The following sections present each level in more detail and relate it to the objectives and requirements.

¶ Application scale

Application scale is the highest level of AI service scale. Services at this level provide solutions for complete use cases, e.g., a full predictive maintenance service specifically for railway switches. The AIMS 5.0 AI Toolbox aims to reach application scale by composing reusable and composable tools.

¶ Tools scale

Tools scale is a compromise between algorithmic scale and application scale. Tools provide applied AI methods: typically one or more AI models combined with some inner logic to solve a recurring problem. For example, localizing assets in a factory takes only a few small steps, yet the problem arises in numerous use cases. Tools are therefore highly reusable and support rapid development, and carefully designed service interfaces also make them composable.
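To make the tools scale concrete, the asset-localization example can be sketched as a tool that combines a model with inner logic. This is a minimal sketch under stated assumptions: the log-distance path-loss formula stands in for a trained AI model, a weighted-centroid step plays the role of the inner logic, and the class name, gateway layout, and parameter values (`p0_dbm`, `path_loss_exp`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Point = Tuple[float, float]


@dataclass
class LocalizationTool:
    """Hypothetical asset-localization tool: one model step plus inner logic."""

    anchors: Dict[str, Point]    # known gateway positions in metres
    p0_dbm: float = -40.0        # assumed RSSI at 1 m from a gateway
    path_loss_exp: float = 2.0   # assumed path-loss exponent (2.0 = free space)

    def rssi_to_distance(self, rssi_dbm: float) -> float:
        # Model step: log-distance path-loss model, a stand-in for a trained model.
        return 10 ** ((self.p0_dbm - rssi_dbm) / (10 * self.path_loss_exp))

    def locate(self, readings: Dict[str, float]) -> Point:
        # Inner logic: weighted centroid of the gateways that heard the asset,
        # weighting each gateway by 1/distance so closer gateways count more.
        if not readings:
            raise ValueError("at least one gateway reading is required")
        weights = {g: 1.0 / self.rssi_to_distance(r) for g, r in readings.items()}
        total = sum(weights.values())
        x = sum(w * self.anchors[g][0] for g, w in weights.items()) / total
        y = sum(w * self.anchors[g][1] for g, w in weights.items()) / total
        return (x, y)


tool = LocalizationTool(anchors={"A": (0.0, 0.0), "B": (10.0, 0.0), "C": (0.0, 10.0)})
# A strong signal at gateway A pulls the estimate towards A.
print(tool.locate({"A": -40.0, "B": -60.0, "C": -60.0}))
```

Swapping `rssi_to_distance` for a learned model would leave the inner logic untouched, which is what keeps such a tool reusable across use cases.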

¶ Algorithmic scale

Algorithmic scale is the most granular level: well-known AI and ML algorithms are implemented directly as AI services. At this level, the main design task is choosing service interfaces that make the services composable. However, while pure algorithms are highly reusable, building complex use cases from them requires great effort. Using pure AI algorithms also demands deep knowledge of AI methods and techniques, which works against rapid development.

¶ Methodology scale

Methodology scale is theoretical: it provides specifications, requirements, and best practices for implementing AI services. Although it can be considered an independent level of AI service scale, it is best understood as the basis of all other levels, since it provides the means to fulfill all the objectives and requirements of AI Toolbox services.

¶ AI Toolbox lifecycle

All AI services share the same four-step lifecycle. To keep services reusable, the customization step tailors a service to specific needs, e.g., by setting its parameters. The next step, training, is optional, but most algorithms require training to perform specific tasks, such as detecting uncommon objects in an image. Training can be complex, so it should support widely used frameworks to produce fine-tuned models efficiently. Deployment is a crucial point in the lifecycle, since models may require variable resources or massive parallelization. Standard solutions for deployment and containerization, as well as extensibility to industrial systems (e.g., the Arrowhead Framework), are also common requirements.
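The lifecycle steps described above can be sketched as a small state guard. This is a hypothetical illustration rather than the Toolbox's own implementation: it covers only the customization, optional training, and deployment steps named in this paragraph, and `train_fn` is a placeholder for a framework-specific training routine.

```python
from enum import Enum, auto
from typing import Any, Callable, Dict


class Stage(Enum):
    CREATED = auto()
    CUSTOMIZED = auto()
    TRAINED = auto()
    DEPLOYED = auto()


class ServiceLifecycle:
    """Hypothetical guard enforcing customize -> (optional) train -> deploy."""

    def __init__(self, requires_training: bool) -> None:
        self.requires_training = requires_training
        self.params: Dict[str, Any] = {}
        self.stage = Stage.CREATED

    def customize(self, **params: Any) -> None:
        # Customization: tailor the reusable service, e.g. by setting parameters.
        # Changing parameters invalidates any earlier training or deployment.
        self.params.update(params)
        self.stage = Stage.CUSTOMIZED

    def train(self, train_fn: Callable[[Dict[str, Any]], None]) -> None:
        # Optional training; train_fn stands in for a framework-specific routine.
        if self.stage is not Stage.CUSTOMIZED:
            raise RuntimeError("customize the service before training it")
        train_fn(self.params)
        self.stage = Stage.TRAINED

    def deploy(self) -> Stage:
        if self.stage is Stage.DEPLOYED:
            return self.stage
        if self.stage is Stage.CREATED:
            raise RuntimeError("customize the service before deploying it")
        if self.requires_training and self.stage is not Stage.TRAINED:
            raise RuntimeError("this service must be trained before deployment")
        self.stage = Stage.DEPLOYED
        return self.stage


detector = ServiceLifecycle(requires_training=True)
detector.customize(confidence_threshold=0.8)
detector.train(lambda params: None)  # placeholder for a real training run
print(detector.deploy())
```

Resetting the stage on every `customize` call is a deliberate choice in this sketch: a parameter change may invalidate a fine-tuned model, so the service has to pass through training again before redeployment.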

¶ AI Toolbox design

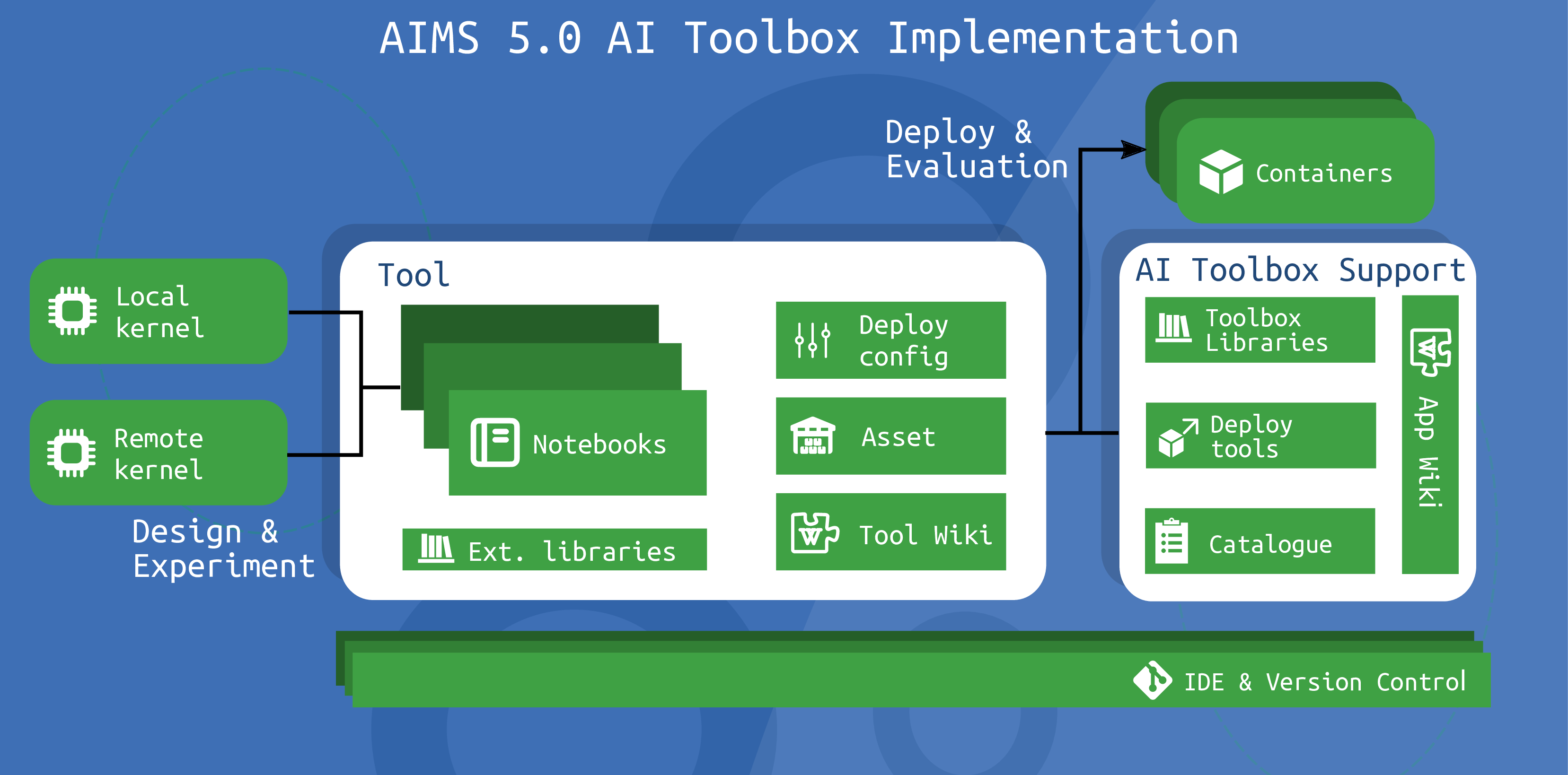

The previous section introduced the main objectives and motivations of the AI Toolbox. This section presents its fundamental requirements, examines how these ideas can be implemented, and outlines the broad architectural framework that should characterize the AI Toolbox. The figure above presents the high-level architecture, including the building blocks of the tools and of the AI Toolbox Support. Contributors are responsible for supplying the tools: ready-to-test notebooks together with the necessary supplementary information and files, including best practices related to the topic and to the actual implementation. The notebook format is particularly well suited to proof-of-concept development because it supports interactive development. The AI Toolbox Support role, in turn, consolidates these notebooks into a Tool catalogue and an Application Wiki, and provides the support and libraries needed to deploy the tools.

¶ AI Toolbox Support building blocks and implementation

One of the key goals of the AI Toolbox is to minimize time-to-market, which calls for fast and simple deployment of proof-of-concept applications and tools. Simplicity, in turn, requires ready-made example tools that can be used as-is or with minor adjustments. We have defined 4 building blocks that will form the AI Toolbox Support:

- Catalogue: Applications consist of tools chained together. The Catalogue lists the tools already included in the AI Toolbox, together with their usage, categorized by field of application.

- Deployment tools: Prompt evaluation of AI tools requires that they can be deployed seamlessly as services. The primary method deploys the notebooks as containerized services with a well-defined interface. This can be extended to other deployment scenarios for cutting-edge industrial frameworks, such as Arrowhead. To deploy implemented tools as services, the AI Toolbox requires deployment tools that use the deployment configuration specified by each tool.

- Toolbox libraries: In line with the philosophy of the AI Toolbox, notebooks must be deployable across various architectures without rewriting code. Because interface integration is the responsibility of the AI Toolbox, these libraries handle interface compatibility and management for the tools.
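As a rough sketch, the Catalogue could be an in-memory index of tools grouped by field of application, with a check that every tool in an application chain is already available. The class and attribute names and the field labels below are hypothetical; the example tool names are taken from tools mentioned on this page.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, Iterable, List


@dataclass(frozen=True)
class CatalogueEntry:
    name: str
    application_field: str   # field of application used for categorization
    usage: str               # short usage note or link to the tool's Wiki page


class ToolCatalogue:
    """Hypothetical in-memory Tool catalogue, grouped by field of application."""

    def __init__(self) -> None:
        self._by_field: DefaultDict[str, List[CatalogueEntry]] = defaultdict(list)

    def register(self, entry: CatalogueEntry) -> None:
        self._by_field[entry.application_field].append(entry)

    def fields(self) -> List[str]:
        return sorted(self._by_field)

    def tools_in(self, application_field: str) -> List[str]:
        # .get avoids creating empty categories as a side effect of lookups.
        return [e.name for e in self._by_field.get(application_field, [])]

    def missing(self, chain: Iterable[str]) -> List[str]:
        # An application is a chain of tools; report tools not yet catalogued.
        known = {e.name for entries in self._by_field.values() for e in entries}
        return [name for name in chain if name not in known]


catalogue = ToolCatalogue()
catalogue.register(CatalogueEntry("object-detection", "computer vision", "see Tool Wiki"))
catalogue.register(CatalogueEntry("asset-localization", "indoor positioning", "see Tool Wiki"))
catalogue.register(CatalogueEntry("context-injection", "language models", "see Tool Wiki"))
print(catalogue.fields())  # ['computer vision', 'indoor positioning', 'language models']
print(catalogue.missing(["asset-localization", "anomaly-detection"]))  # ['anomaly-detection']
```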

The whole concept rests on a flexible, open-source Integrated Development Environment (IDE) that can run notebooks, including in remote settings. Remote execution is essential because deploying large AI models locally may not be feasible, and multiple notebook instances may need to use the same model. These requirements form the foundation for developing and operating AI-based tools and industrial applications. Version control is another indispensable requirement for the AI Toolbox; well-proven solutions such as Git, Subversion, or Mercurial are used for it.

¶ Tool building blocks

This section outlines the five fundamental building blocks that form the core components of a tool. Together, they describe what a contributor should include in a tool contributed to the AI Toolbox:

- Notebooks: The AI Toolbox is built around notebook-based tool implementations from contributors. The notebook format suits proof-of-concept applications because it provides an excellent framework for testing new ideas quickly. If a notebook meets certain requirements, it can be deployed immediately.

- Deployment configuration: To deploy the notebooks as services, tools should provide the necessary parameters in the form of a deployment configuration, as described in the Application Wiki.

- External libraries: Libraries written by other developers that a solution needs. Including them is possible, but a bare reference to the external libraries is also sufficient.

- Asset: Any external data sources a tool needs, e.g., images for an object detection tool or a text document for a context injection tool.

- Tool Wiki: The most important building block of a tool, this Wiki page collects best practices and experiences about the tool and the applications where it can be used.
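The interplay between a tool's deployment configuration and the deployment tools can be sketched as follows. This assumes a hypothetical configuration format (`DeploymentConfig`) and a container-based target: the hypothetical `render_dockerfile` function turns the configuration into a Dockerfile that installs the external libraries, copies the notebook and its assets, and converts the notebook to a script with `jupyter nbconvert --to script`. It further assumes a Python-kernel notebook that starts its own service on the configured port when run as a script. Other targets, such as Arrowhead, would need their own renderer.

```python
import shlex
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import List


@dataclass
class DeploymentConfig:
    """Hypothetical deployment configuration shipped alongside a tool's notebook."""

    notebook: str                                           # e.g. "detector.ipynb"
    base_image: str = "python:3.11-slim"                    # assumed base image
    requirements: List[str] = field(default_factory=list)   # external libraries (references only)
    assets: List[str] = field(default_factory=list)         # data files the tool needs
    port: int = 8080                                        # port the service listens on

    def validate(self) -> None:
        if not self.notebook.endswith(".ipynb"):
            raise ValueError("notebook must be an .ipynb file")
        if not 0 < self.port < 65536:
            raise ValueError("port must be in 1..65535")


def render_dockerfile(cfg: DeploymentConfig) -> str:
    """Hypothetical deployment tool: turn a DeploymentConfig into a Dockerfile."""
    cfg.validate()
    notebook = PurePosixPath(cfg.notebook).name
    script = PurePosixPath(notebook).with_suffix(".py").name  # nbconvert output for a Python kernel
    # Quote each package so specifiers such as "numpy>=1.26" survive the shell.
    packages = " ".join(shlex.quote(p) for p in ["nbconvert", *cfg.requirements])
    lines = [
        f"FROM {cfg.base_image}",
        "WORKDIR /app",
        f"RUN pip install --no-cache-dir {packages}",
        f"COPY {cfg.notebook} ./",
    ]
    lines += [f"COPY {asset} ./assets/" for asset in cfg.assets]
    lines += [
        f"RUN jupyter nbconvert --to script {notebook}",
        f"EXPOSE {cfg.port}",
        # Assumes the converted notebook starts its own service on cfg.port.
        f'CMD ["python", "{script}"]',
    ]
    return "\n".join(lines) + "\n"


cfg = DeploymentConfig(
    notebook="detector.ipynb",
    requirements=["numpy>=1.26"],
    assets=["images/sample.png"],
    port=8000,
)
print(render_dockerfile(cfg))
```

Keeping the configuration declarative means the same tool description could be rendered for other deployment targets without touching the notebook itself.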